Retrieval-Augmented Generation (RAG) enhances AI by integrating large language models (LLMs) with real-time knowledge retrieval, reducing reliance on pre-trained data and improving response accuracy.

But retrieval alone has limits. Vector-based search struggles with ambiguous context, lacks explicit reasoning, and doesn’t maintain structured knowledge over time. This affects reliability, especially in fields like healthcare, finance, and legal AI, where accuracy and transparency are critical.

Knowledge Graphs (KGs) solve part of this problem. Unlike dense vector embeddings, KGs define structured relationships, helping AI connect concepts, resolve ambiguity, and apply logic. When combined with RAG, AI can retrieve knowledge more effectively, ground responses in facts, and provide explanations—making results more reliable.

This post breaks down how RAG works, where it falls short, and how Knowledge Graphs help AI move from just retrieving information to truly understanding and applying it. Let’s get started.

First things first, what is RAG?

Retrieval-augmented generation (RAG) improves how AI finds and uses information by combining large language models (LLMs) with external knowledge retrieval. Instead of relying only on pre-trained data, RAG fetches relevant information from external sources, making responses more accurate and reducing guesswork.

This approach lowers the chances of AI generating incorrect information, improves accuracy, and strengthens reasoning by grounding responses in retrieved data.

While its reliability depends on the quality of the retrieved content and how it’s integrated, RAG makes AI more effective for tasks that require up-to-date or specialized knowledge.

Key components of RAG

RAG works by combining retrieval and generation, ensuring AI responses are informed by relevant external data. Here’s how it functions:

- Retriever – Finds relevant documents from an external knowledge source using methods like FAISS (vector search), BM25 (keyword-based), or a mix of both.

- Generator – Uses a pre-trained LLM (GPT-4, Llama, Anthropic or Gemini) to generate responses based on the retrieved documents.

- Fusion – Filters and refines retrieved content by ranking, re-weighting, or applying attention mechanisms to make the final response more accurate and relevant.

How RAG works

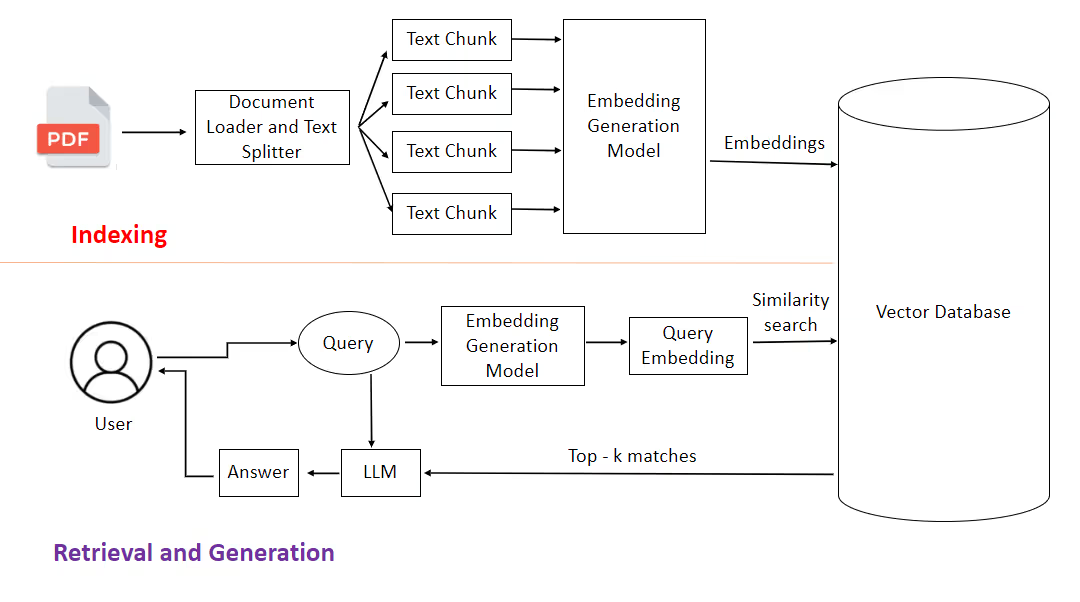

RAG improves AI responses by retrieving relevant information before generating an answer.

Here’s how it works:

- User query – The user asks a question.

- Vectorization – The query is converted into a numerical format using an embedding model (OpenAI, SentenceTransformers) so it can be compared with stored knowledge. Some systems also use keyword-based search (BM25) alongside vector embeddings.

- Retrieval – The system searches a knowledge database (FAISS, ChromaDB, or Weaviate) to find the most relevant documents.

- Augmentation – The retrieved documents are processed and formatted before being fed into the AI model as extra context.

- Response generation – The AI generates an answer by combining the retrieved information with its pre-trained knowledge.

- Output refinement (optional) – Some systems improve the response using ranking, confidence scoring, or filtering techniques.

This process helps AI provide more accurate and context-aware responses by grounding them in real, retrievable data rather than relying solely on pre-training.

How to implement RAG

One way to build a RAG system is by using FAISS as the vector store to manage document retrieval and LangChain to structure the workflow. This setup helps keep the system modular and adaptable.

from langchain_community.vectorstores import FAISS

from langchain.embeddings import OpenAIEmbeddings

from langchain.chains import RetrievalQA

from langchain_openai import OpenAI

# Load LLM and embedding model

llm = OpenAI(temperature=0)

embeddings = OpenAIEmbeddings()

# Create FAISS vector store with example documents

vector_store = FAISS.from_texts(["Example document 1", "Example document 2"], embeddings)

retriever = vector_store.as_retriever()

# Create RAG pipeline

qa_chain = RetrievalQA.from_chain_type(llm=llm, retriever=retriever, chain_type="stuff")

# Query the RAG system

response = qa_chain.invoke({"query": "What is RAG?"})

print(response["result"])

What is a Knowledge Graph (KG)?

A Knowledge Graph (KG) connects information like a web, linking people, places, concepts, and their relationships.

Unlike traditional databases that store data in tables, KGs organize knowledge in a way that helps AI understand context, find patterns, and answer complex questions more accurately. They are especially useful for reasoning, inference, and retrieving structured information.

Key components of a Knowledge Graph

- Entities (Nodes): The main building blocks, representing real-world things like people, companies, or ideas (e.g., Elon Musk, Tesla, Neuralink).

- Relationships (Edges): The connections between entities, showing how they relate to each other (e.g., Elon Musk → CEO of → Tesla).

- Attributes (Properties): Extra details about entities or relationships, like Tesla’s market value (market_cap: $1T).

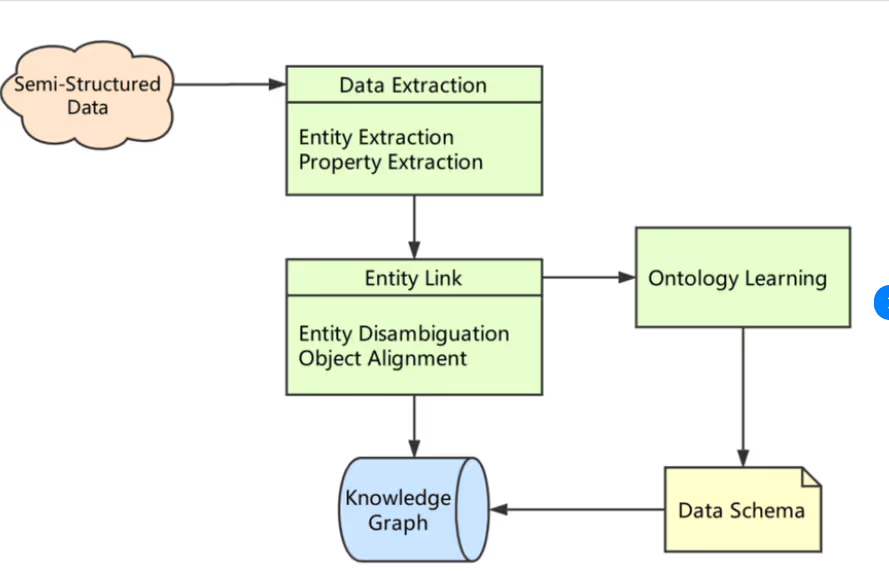

How a Knowledge Graph works

- Collecting Data: Information is gathered from different sources, like databases, APIs, or documents.

- Identifying Entities & Links: AI scans the data to find key people, places, or things and connects them to existing knowledge.

- Storing & Indexing: The graph is saved in a specialized database (e.g., Neo4j, ArangoDB) and organized for quick searches.

- Searching & Querying: Users can ask structured questions using languages like Cypher or SPARQL to find insights.

- Reasoning & Insights: AI uses graph algorithms to uncover deeper connections, resolve ambiguities, and find complex relationships.

Building a simple Knowledge Graph with Neo4j

This example shows how to create a basic Knowledge Graph (KG) using Neo4j. It defines entities (people, companies) and their relationships, then retrieves connected data.

Prerequisites

Before running the code, ensure the following:

- Neo4j is running on localhost:7687.

- Authentication credentials (neo4j, password) match your database.

- Neo4j Python driver is installed (pip install neo4j).

Implementation

from neo4j import GraphDatabase

kg = GraphDatabase.driver("bolt://localhost:7687", auth=("neo4j", "password"))

with kg.session() as s:

s.run("MERGE (p:Person {name:'Elon Musk'}) MERGE (c:Company {name:'Tesla'}) MERGE (p)-[:CEO_OF]->(c)")

print([r.values() for r in s.run("MATCH (p:Person)-[:CEO_OF]->(c:Company) RETURN p.name, c.name")])

kg.close()

Knowledge Graphs vs Semantic Search / Vector Embeddings

Knowledge Graphs (KGs) and Semantic Search / Vector Embeddings are both powerful approaches to representing and retrieving information, but they take fundamentally different approaches to understanding and connecting data.

This experiment compares Traditional RAG, which is fast and retrieves similarity-based results, and Knowledge Graph-Enhanced RAG, which is slower but connects ideas for deeper insights.

How they represent information

Knowledge Graphs

- Store information as a structured network of entities (nodes) and their relationships (edges)

- Explicitly define connections between concepts with labeled, typed relationships

- Provide a clear, interpretable schema of how different pieces of information connect

Semantic Search / Vector Embeddings

- Represent information as dense numerical vectors in a high-dimensional space

- Capture semantic meaning through vector proximity

- Transform text, images, or other data into mathematical representations that capture contextual nuances

Information retrieval mechanism

Knowledge Graphs

- Use graph traversal and structured query languages (like Cypher or SPARQL)

- Perform precise, logic-based lookups across interconnected entities

- Can execute complex multi-step reasoning by following relationship paths

Semantic Search / Vector Embeddings

- Rely on similarity measurements in vector space (cosine similarity, dot product)

- Find closest matches using vector distance calculations

- Perform rapid, approximate nearest neighbor searches

Reasoning capabilities

Knowledge Graphs

- Supports explicit, rule-based reasoning

- Can perform logical inference across complex relationship networks

- Allow for transparent, step-by-step deductive reasoning

- Enable complex queries like "Find all connections between X and Y"

Semantic Search / Vector Embeddings

- Provide implicit, statistical reasoning based on vector similarities

- Capture contextual and conceptual relationships through proximity

- Less transparent in how connections are made

- Rely on learned representations from training data

Handling ambiguity and context

Knowledge Graphs

- Reduce ambiguity by using unique, well-defined entity identifiers

- Allow explicit type and context disambiguation

- Can maintain multiple definitions or contexts for entities

Semantic Search / Vector Embeddings

- Handling ambiguity through contextual vector representations

- Capture nuanced meanings based on the surrounding context

- Can sometimes struggle with highly context-dependent meanings

Scalability and performance

Knowledge Graphs

- Highly efficient for structured, interconnected data

- Performance can degrade with extremely large, complex graphs

- Excellent for domain-specific, relationship-intensive applications

- Require significant upfront modeling and maintenance

Semantic Search / Vector Embeddings

- Extremely fast retrieval, especially with optimized vector databases

- Scale well to massive, unstructured datasets

- Lower computational overhead for initial setup

- Continuous learning is possible through embedding updates

Complementary strengths

While different, Knowledge Graphs and Semantic Search / Vector Embeddings are not mutually exclusive. Advanced systems can combine both approaches:

- Use vector embeddings to generate initial candidate matches

- Leverage knowledge graphs for precise, explainable reasoning

- Create hybrid systems that benefit from both statistical and logical approaches

How Knowledge Graphs make RAG smarter

Knowledge graphs (KGs) help RAG understand information better by organizing data in a structured way, connecting different pieces of information, and making AI smarter at finding answers, reasoning, and understanding relationships between things.

When combined with retrieval-augmented generation (RAG), KGs improve search accuracy, provide better context, and help AI make more logical connections.

Clear and explainable answers

Unlike some AI models that work like a "black box," KGs store information in a way that is easy to trace, which is how AI can explain why it gives a certain answer, and that is very important in fields like healthcare, finance, and law, where trust and transparency matter.

Better reasoning and smart connections

KGs help RAG make sense of information by following connections between related ideas.

For example, if AI is asked about a company’s CEO, a KG ensures it links the right person to the right company, helping it understand indirect relationships, like how two companies might be connected through shared investors.

Faster and more accurate searches

Instead of searching based only on similar words (like vector search does), KGs use structured queries to find precise answers.

Special indexing methods make searches faster, even when dealing with huge amounts of data. When used in RAG, this structured search helps AI find more relevant and accurate information.

More context and richer information

KGs bring together structured data (from databases) and unstructured data (like text and images) to give it a more complete understanding. They also help recognize important connections—like linking a person's name in an article to their profile in a database—so responses are more accurate and meaningful.

Understanding questions better

KGs bring together structured data (from databases) and unstructured data (like text and images) to give it a more complete understanding. They also help recognize important connections—like linking a person's name in an article to their profile in a database—so responses are more accurate and meaningful.

Why combining KGs with RAG makes it better

By adding KGs to RAG, AI can move beyond simple word matching and retrieval.

It understands context better, connects ideas logically, and provides clear, well-structured answers. This combination makes AI more accurate, reliable, and useful in real-world applications.

How knowledge graphs and vector-based RAG work together

A hybrid approach enhances AI-driven knowledge retrieval by combining the flexibility of vector search with the structured reasoning of a knowledge graph (KG).

While RAG retrieves relevant text using vector embeddings, KGs provide explicit entity relationships, improving context understanding, accuracy, and explainability.

By integrating KGs, AI models can resolve ambiguities, perform multi-hop reasoning, and retrieve structured insights, leading to more reliable and interpretable responses.

Step-by-step workflow of hybrid RAG-KG

Step 1: Setup Neo4j & vector database

Vector search for semantic retrieval – FAISS stores document embeddings, enabling fast similarity search using OpenAI or SentenceTransformers.

Neo4j for structured knowledge – Stores entities and relationships, allowing Cypher queries for precise fact retrieval and multi-hop reasoning.

Hybrid retrieval pipeline – Vector search retrieves broad context, while Neo4j enhances accuracy by linking structured knowledge to extracted entities.

from neo4j import GraphDatabase

from langchain.vectorstores import FAISS

from langchain.embeddings.openai import OpenAIEmbeddings

driver = GraphDatabase.driver("bolt://localhost:7687", auth=("neo4j", "password"))

embeddings = OpenAIEmbeddings()

vector_db = FAISS.load_local("faiss_index", embeddings)

Step 2: Retrieve relevant documents (vector search)

Query embedding & vectorization – Converts the input query into a dense vector using a pre-trained model (e.g., OpenAI, SentenceTransformers) to represent semantic meaning.

Approximate nearest neighbor (ANN) search – Compares the query vector against a high-dimensional index in the vector database (e.g., FAISS, ChromaDB) using algorithms like HNSW or IVF for efficient retrieval.

Top-k document ranking – Retrieves the most relevant documents based on similarity (cosine similarity, L2 distance), providing unstructured context for further entity extraction and knowledge graph retrieval.

def vector_search(query):

return vector_db.similarity_search(query, k=2)

Step 3: Extract entities & query the knowledge graph (KG)

Entity extraction via NER – Named Entity Recognition (NER) models identify key entities (e.g., persons, organizations, concepts) from retrieved text, converting unstructured data into structured knowledge.

Graph-based entity lookup – Extracted entities are queried in Neo4j using Cypher, retrieving direct facts and explicit relationships for factual grounding.

Multi-hop graph traversal – Expands beyond single-node lookups to explore interconnected entities (e.g., MATCH (a)-[*1..N]->(b)), uncovering indirect relationships and enriching context.

Recursive path expansion – Dynamically traverses hierarchical structures (e.g., supply chains, affiliations, citations) to extract deeper, multi-contextual insights.

import spacy

from neo4j import GraphDatabase

driver = GraphDatabase.driver("bolt://localhost:7687", auth=("neo4j", "password"))

nlp = spacy.load("en_core_web_sm")

def extract_entities(text):

return [ent.text for ent in nlp(text).ents]

def query_kg(entity):

with driver.session() as session:

return session.run("MATCH (n) WHERE n.name = $e RETURN n", e=entity).data()

def multi_hop_query(entity):

with driver.session() as session:

return session.run("MATCH (a)-[*1..2]->(b) WHERE a.name = $e RETURN a, b", e=entity).data()

Step 4: Integrate retrieved knowledge & refine context

Merging structured & unstructured data – Combines KG-retrieved facts with vector search text results to create a cohesive knowledge representation.

Context filtering & relevance scoring – Ranks extracted entities and documents based on their semantic fit and factual alignment with the query.

Multi-hop reasoning validation – Ensures retrieved facts maintain logical consistency, discarding contradictory or low-confidence information before final generation.

Step 5: Generate final answer using LLM

Context fusion from hybrid retrieval – Combines vector search results (unstructured text) with Neo4j knowledge graph data (structured facts) to improve semantic relevance and factual accuracy.

Prompt engineering for optimal LLM input – Structures retrieved information into a well-defined prompt, incorporating multi-hop reasoning to guide the LLM toward coherent, context-aware responses while reducing hallucinations.

LLM-based response synthesis – Uses a language model (GPT-4, Claude, or Llama) to generate the final response, integrating retrieved documents and structured KG knowledge for improved accuracy, depth, and logical consistency.

from langchain.chat_models import ChatOpenAI

llm = ChatOpenAI(model_name="gpt-4")

def generate_response(query):

docs = vector_search(query)

entities = extract_entities(docs[0].page_content)

kg_data = [query_kg(entity) for entity in entities]

context = f"Documents: {docs}\nKnowledge Graph: {kg_data}\n\nQuery: {query}"

return llm.predict(context)

Why this hybrid approach works

A hybrid RAG-KG model works because it combines flexibility with structured reasoning. Knowledge graphs resolve ambiguity by clearly defining relationships, preventing mistakes that can happen with vector-based retrieval alone.

They add depth to responses by connecting related concepts instead of relying just on keyword matching. Every fact is linked to a source, making AI outputs more transparent and easier to verify.

By mixing RAG’s ability to find diverse content with KG’s structured logic, this model makes AI responses more accurate, reliable, and easy to understand.

To sum up

Experimenting with AI and building POCs—sometimes for clients, sometimes just because we’re curious—is part of our daily work. My colleagues and I were talking about how AI retrieves information. RAG does a great job pulling in relevant text, but without a way to verify or connect it, context gets lost.

Knowledge Graphs, on the other hand, structure relationships and ground facts, making information more reliable. So, we started testing what happens when you bring them together. A hybrid approach merges RAG’s ability to gather context with KG’s ability to organize and validate it.

We’ve been exploring this approach, and seeing how by integrating RAG’s contextual retrieval with knowledge graphs structured validation, AI not only finds information for you but it also understands and explains it.

This hybrid approach enhances accuracy, strengthens trust, and makes AI- driven insights more reliable in high-stakes fields like healthcare, finance, and legal AI.

Inspiration:

WorldofAI, HybridRAG: Ultimate RAG Engine - Knowledge Graphs + Vector Retrieval! Better Than GraphRAG

.avif)